Most brands say they’re testing UGC ads… but if you look closely, they’re really just posting a bunch of random videos and hoping one magically takes off.

One day it’s a “review-style” ad. The next day it’s a founder story. Then it’s a hard discount angle. Then it’s a totally different creator with a totally different vibe. Different hook, different script, different length, different product claims… and somehow we’re surprised the results are inconsistent.

That’s the real reason most UGC testing fails: you’re changing too many variables at the same time.

So when something works, you don’t know why. And when it flops, you don’t know what to fix.

This article gives you a simple, repeatable 7-day testing framework you can run every single week to find UGC ads that actually scale, without wasting budget or guessing your way through it.

In the next few minutes, you’ll see exactly how to test:

- hooks (what stops the scroll)

- angles (what makes people care)

- iterations (how you turn one ad into ten)

- budget splits (so you don’t overspend on trash)

- winner rules (so you know what to scale and what to kill)

Let’s break it down.

What “Winning” Actually Means in UGC Testing

If you don’t define what a “winner” is, you’ll end up doing what most brands do:

You’ll chase vanity metrics (cheap clicks, high CTR, “wow this video looks good”), scale something too early… and then wonder why it doesn’t actually convert.

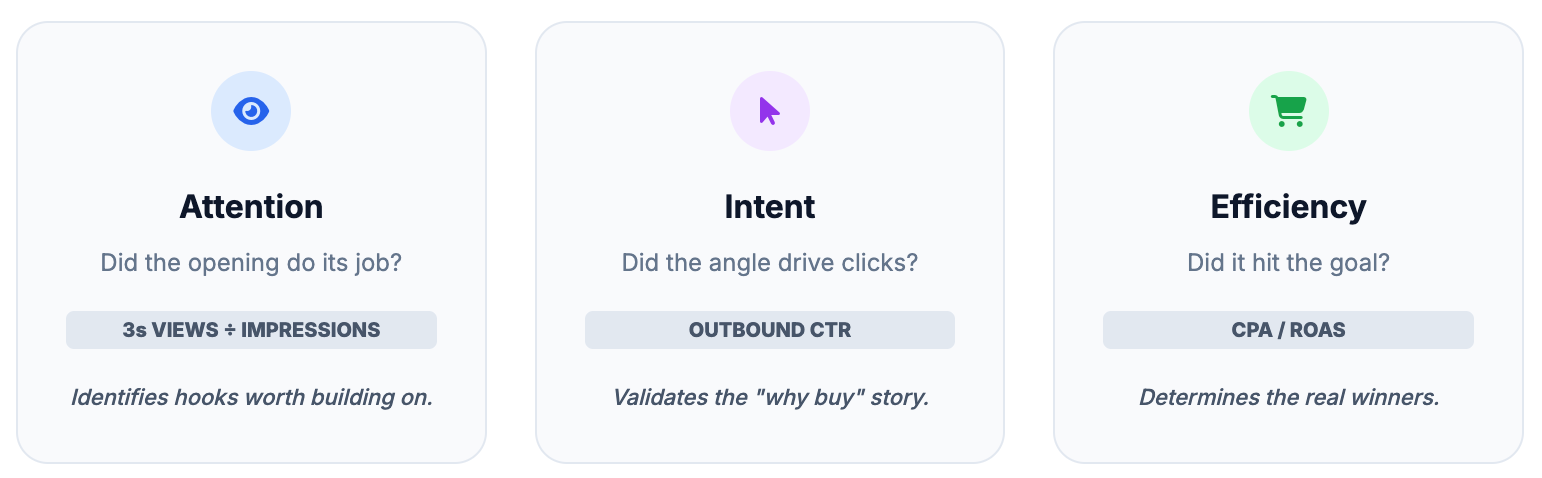

In UGC testing, a “winner” usually shows up in 3 levels:

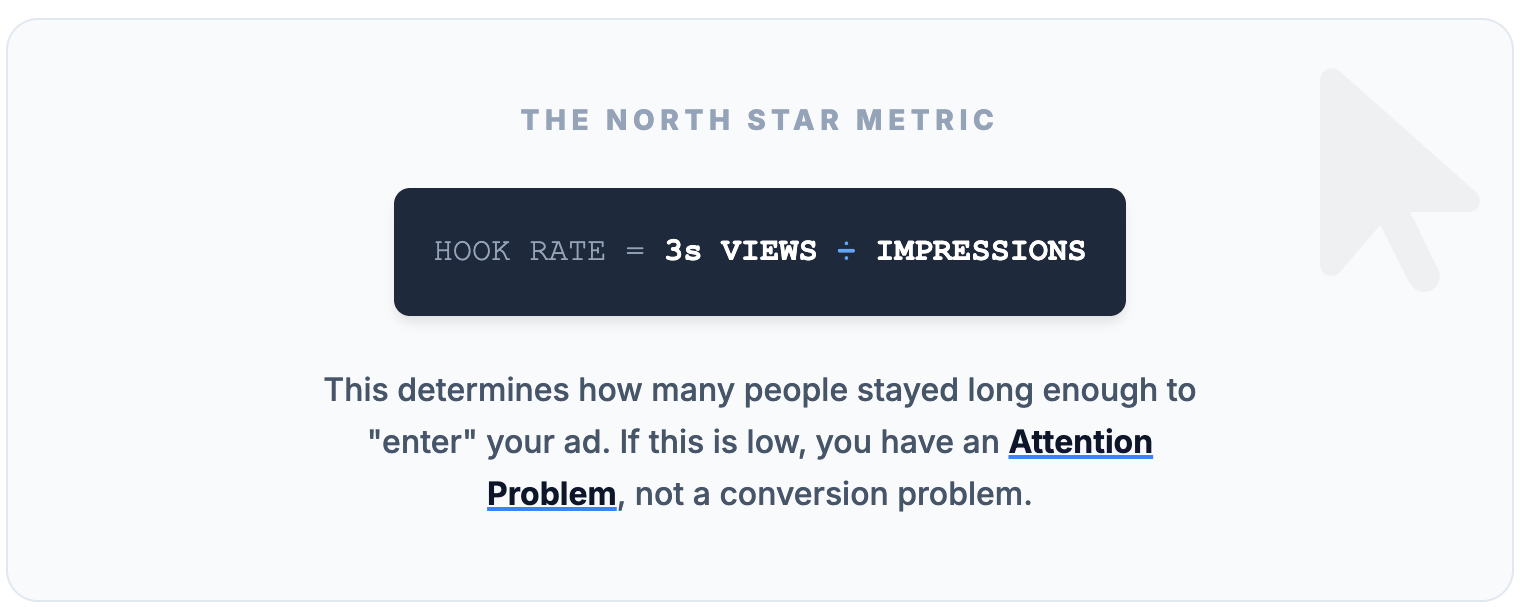

1) Attention Winner (Strong Hook / Thumbstop)

This is your scroll-stopper.

It doesn’t mean the ad is profitable yet — it just means your first 1–2 seconds are doing their job.

A common way to measure this is hook rate / thumbstop rate, usually calculated as:

3-second video views ÷ impressions

If your hook is weak, everything downstream suffers because people never get far enough to hear your message.
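If you pull your numbers into a script instead of eyeballing the dashboard, the hook rate calculation is tiny. Here’s a minimal Python sketch: the function name and the sample numbers are made up for illustration, and returning 0.0 for an ad with no impressions is an assumption about how you’d want to treat ads that never delivered.

```python
def hook_rate(three_second_views: int, impressions: int) -> float:
    """Return 3-second video views divided by impressions (0.0 if no impressions)."""
    if impressions <= 0:
        # Assumption: an ad that never delivered has no measurable hook rate.
        return 0.0
    return three_second_views / impressions


# Hypothetical numbers for two ads that received the same impressions.
ad_a = hook_rate(three_second_views=3_200, impressions=10_000)
ad_b = hook_rate(three_second_views=1_900, impressions=10_000)
print(f"Ad A: {ad_a:.1%}, Ad B: {ad_b:.1%}")  # Ad A: 32.0%, Ad B: 19.0%
```

Comparing rates only makes sense when both ads got a similar number of impressions, which is why the setup sections later in this article care so much about even delivery.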

2) Engagement Winner (Holds + Clicks)

This is the ad that keeps people watching and gets the click.

It usually means:

- your angle makes sense

- the pacing is solid

- the “proof” feels believable

- the creator delivery feels natural

This is the stage where “good vibes” aren’t enough anymore — the video needs to build intent, not just attention.

3) Conversion Winner (CPA / ROAS Target)

This is the real winner.

It produces purchases at a CPA that makes sense for your business (or a ROAS you can scale), and it doesn’t fall apart the second you spend more.

A lot of marketers mention this exact trap: an ad can look amazing at the top of the funnel (views/CTR) but still fail to sell.

The real goal: find scalable patterns (not one lucky video)

One “winning video” is nice… but it’s fragile.

What you actually want is a winning concept you can reproduce across:

- different creators

- different hook variations

- different edits / pacing

- different formats (talking head, demo, review)

That’s when you stop relying on luck and start building a system.

✅ Mini-callout box:

“A winning ad is a repeatable concept that performs across multiple variants.”

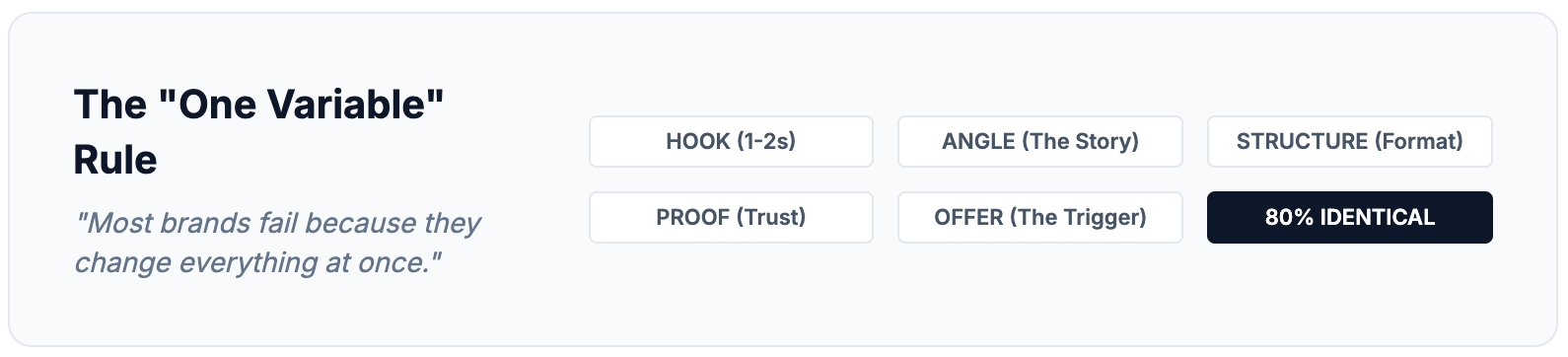

The Core Principle: Test One Thing at a Time

Most brands don’t fail UGC testing because they lack content. They fail because they change everything at once, then try to guess what caused the result.

One day it’s a new creator. The next day it’s a different script. Then a new offer, new angle, new edit, new length… and suddenly you’re looking at numbers that don’t tell you anything useful.

That’s why this framework works: it keeps your tests clean.

The rule is simple: keep 80% of the ad identical, and only change one variable at a time. Meta also recommends testing one variable at a time if you want more conclusive results, because it’s the only way to understand what actually made the performance go up (or crash).

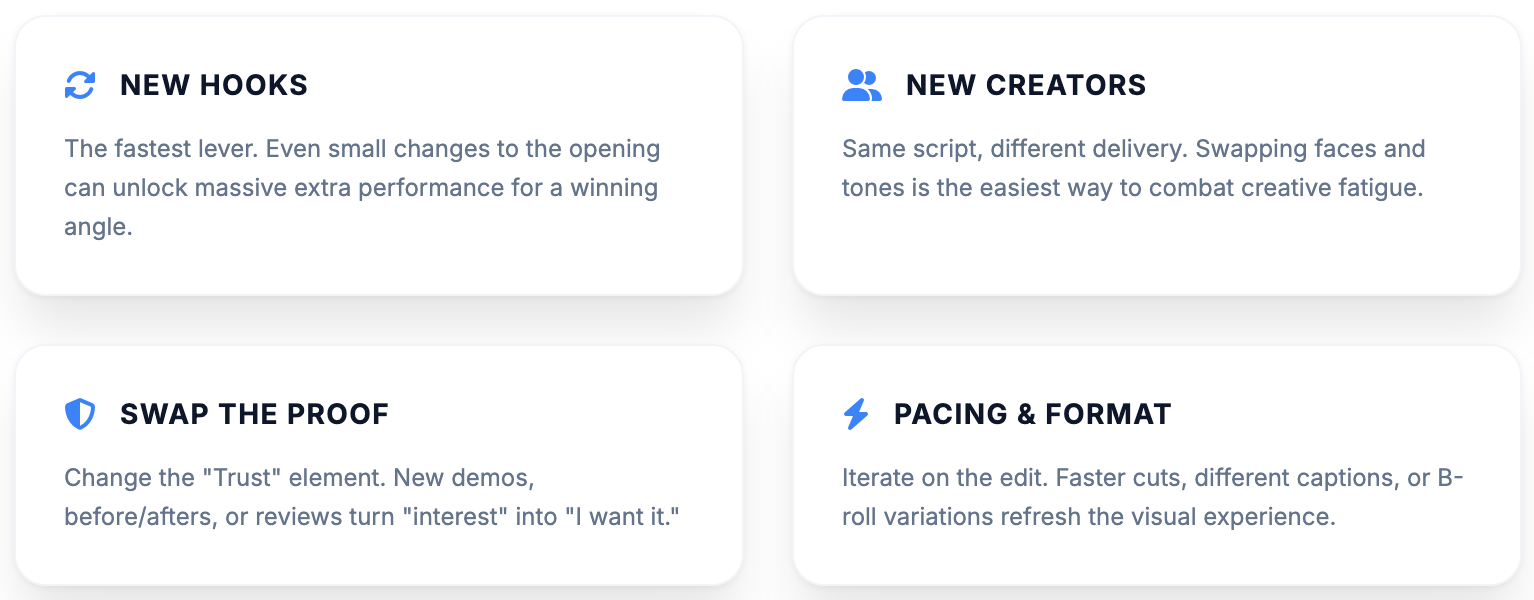

In UGC, the most common “variables” you can test are your hook, your angle, your structure, your proof, and your offer.

Your hook is the first 1–2 seconds — the part that stops the scroll. If it doesn’t hit fast, nobody stays long enough to hear the rest.

Your angle is the reason someone should care. Same product, totally different angle, totally different buyer reaction. It’s basically the story your ad is telling: why this exists, who it’s for, and what problem it solves.

Your structure is the format you use to deliver the message. Some ads work best as a review, others as a demo, others as problem/solution, and some win as a simple tutorial or storytime. Structure affects how easy it is to understand the product — and how long people watch.

Your proof is what makes the ad believable. This is where UGC really shines: demos, before/after, testimonials, real comments, screenshots, even “I didn’t believe it until…” moments. Proof is what turns interest into trust.

And your offer is the decision trigger. Discounts, bundles, free shipping, bonuses — the offer can change conversion behavior so much that it should be treated like its own test, not something you swap randomly.

If you follow this rule (80% identical + one change at a time), your testing stops being a guessing game. You’ll know what worked, you’ll know why it worked, and when you find a winner, you’ll actually be able to repeat it — instead of hoping lightning strikes twice.

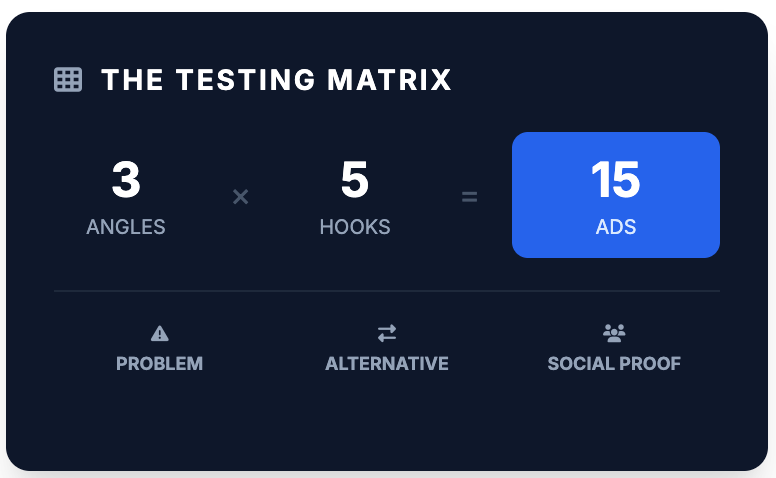

Day 0 (Prep): Build a Testing Matrix

Before you spend a single dollar, you need a simple testing matrix — because “let’s just test some UGC” usually turns into chaos fast.

The goal of the matrix is to force structure. You want a clear plan for what you’re testing (hooks vs angles), how many creatives you’re launching, and what stays the same so your results actually mean something.

The cleanest setup is this:

Pick 3 angles, write 5 hooks for each angle, and you’ll end up with 15 ads (3 × 5). That’s enough variety to find patterns, but not so much that you can’t manage it.

And yes — people who test at scale on Reddit keep repeating the same principle: run controlled variations (3–4 at a time), keep variables consistent, and test one element at a time so you can trust the outcome.
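If it helps to see the matrix as data, here’s a rough Python sketch of the 3 × 5 setup. Everything named here (`AdSpec`, `build_matrix`, the angle labels, the CTA text, the example.com URL) is a placeholder, not part of any ad platform’s API. The point is just that the angle and hook vary while the locked fields stay identical on every row.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdSpec:
    angle: str
    hook: str
    # Locked fields: identical for every ad in the batch.
    cta: str
    landing_page: str


def build_matrix(hooks_by_angle: dict[str, list[str]], cta: str, landing_page: str) -> list[AdSpec]:
    """Expand {angle: [hooks]} into one AdSpec per angle/hook pair."""
    return [
        AdSpec(angle=angle, hook=hook, cta=cta, landing_page=landing_page)
        for angle, hooks in hooks_by_angle.items()
        for hook in hooks
    ]


# Placeholder labels; swap in your real angles and hook lines.
ANGLES = ["problem_solution", "better_alternative", "social_proof"]
hooks_by_angle = {angle: [f"{angle}_hook_{i}" for i in range(1, 6)] for angle in ANGLES}

matrix = build_matrix(hooks_by_angle, cta="Shop now", landing_page="https://example.com/product")
print(len(matrix))  # 15
```

A spreadsheet with the same columns does the job just as well; what matters is that every ad has an explicit angle, an explicit hook, and the same locked values.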

Your 3 Angles (the “why buy” stories)

Think of an angle as the reason someone should care about the product.

For example, you might test:

- Problem → solution (fix the pain fast)

- Better alternative (the old way sucks, this is easier)

- Social proof / trend (people like me are already using it)

Keep the angle consistent inside a test batch — otherwise you’re comparing completely different messages.

Your 5 Hooks (the first 1–2 seconds)

Hooks are where UGC ads win or die. They’re not meant to explain everything — they’re meant to stop the scroll.

A simple way to brainstorm is using quick hook frameworks like “This you?”, which Social Cat even breaks down as a punchy, relatable opener you can pair with a demo or story right after.

What stays the same (so you’re not guessing)

Once your matrix is set, lock the rest.

Keep your:

- video length (roughly)

- CTA

- landing page

- core script structure

- placement settings (as much as possible)

Meta’s A/B testing guidance basically supports this approach: you compare performance by changing variables like creative, audience, or placement — but the key is controlled comparisons, not random changes everywhere.

If you do this right, Day 1 becomes easy: you launch your matrix, and you’re instantly testing in a way that produces real learning, not “I think this creator is better.”

Day 1–2: Hook Testing (Fast Filtering)

The fastest way to find winners is to start with hooks, because hooks decide whether your ad even gets a chance to sell.

A hook is the opening line or moment that grabs attention and makes someone keep watching — Social Cat describes it as the “bait” that pulls people into your content. If the hook doesn’t land in the first 1–2 seconds, your angle, your proof, your offer… none of it matters, because people are already gone.

This is why Days 1–2 are about filtering, not scaling.

You’re going to take one angle (same story, same structure) and test multiple hooks against it. The point isn’t to find the perfect full ad yet — it’s to find the openings that consistently stop the scroll, so you can build winners on top of them later.

A simple starting setup is 10–15 hook variations, keeping everything else as similar as possible. Reddit advertisers talk about this exact idea: isolate one variable (like the hook) so the test isn’t just expensive guessing.

To judge hook performance, the most common metric is hook rate / thumbstop rate, which is usually calculated as:

3-second video views ÷ impressions

This number tells you how many people actually stayed long enough to “enter” the ad. If it’s low, it’s not a conversion problem — it’s an attention problem.

By the end of Day 2, you’re not trying to pick “the final winner.” You’re just trying to identify the top 20–30% hooks that clearly outperform the rest. Once you have those, you’ll reuse them in the next phase where it actually gets interesting: testing angles.
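The “keep the top 20–30%” cut is easy to make mechanical. The sketch below is one way to do it in Python, assuming you already have a hook rate per hook; the `top_hooks` name, the 25% default, and the sample rates are all illustrative choices, not a standard.

```python
import math


def top_hooks(hook_rates: dict[str, float], keep_fraction: float = 0.25) -> list[str]:
    """Return hook names in the top `keep_fraction` by hook rate (always at least one)."""
    ranked = sorted(hook_rates, key=hook_rates.get, reverse=True)
    keep = max(1, math.ceil(len(ranked) * keep_fraction))
    return ranked[:keep]


# Hypothetical hook rates from a 10-hook batch.
rates = {
    "h1": 0.31, "h2": 0.18, "h3": 0.27, "h4": 0.22, "h5": 0.35,
    "h6": 0.15, "h7": 0.20, "h8": 0.29, "h9": 0.12, "h10": 0.25,
}
print(top_hooks(rates))  # ['h5', 'h1', 'h8']
```

Rounding up means 10 hooks at 25% keeps 3, which still lands inside the 20–30% range. If the gap between the last kept hook and the first cut hook is tiny, treat the ranking as a tie rather than a verdict.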

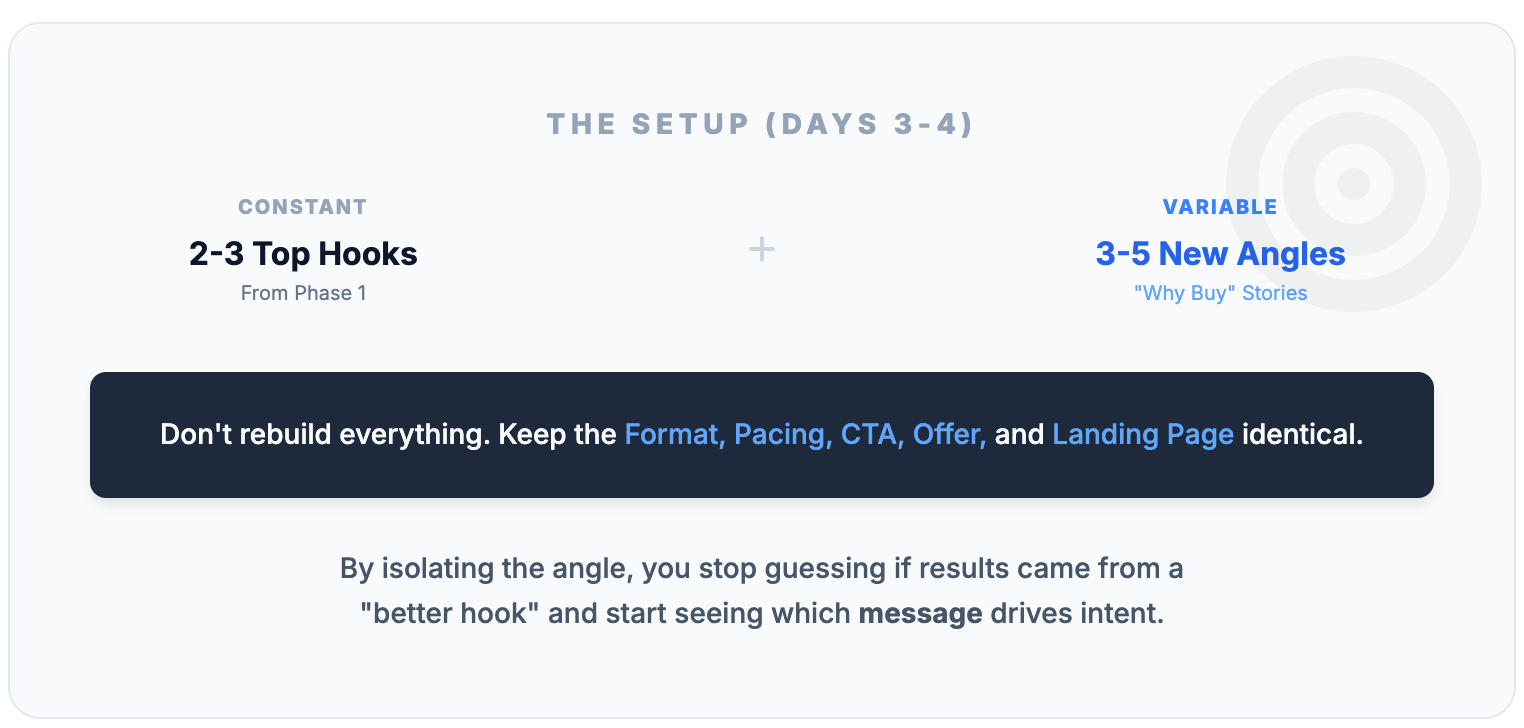

Day 3–4: Angle Testing (What Story Actually Sells?)

Once you’ve filtered down to a few strong hooks, the next question becomes way more important:

What’s the message that actually makes people buy?

Because hooks are great for attention… but angles are what drive intent.

An angle is the “why buy” story behind the product. Same product, completely different angle, completely different outcome. And this is where a lot of brands get stuck — they find a hook that gets views, but the story doesn’t create enough desire to convert.

For Days 3–4, you’re going to keep your audience and delivery conditions consistent, and test angles in a clean way. A super common structure (and one you’ll see recommended a lot by experienced media buyers) is to group ads by angle, while keeping the rest identical.

Here’s the simplest setup:

You take your top 2–3 hooks from Days 1–2, and you apply them to 3–5 different angles. That way, you’re not guessing whether your results came from a “better hook” — you’re letting the hook stay strong while the angle is what changes.

And this part matters: don’t rebuild everything for each angle. Keep the same format, same pacing, same CTA, same offer, same landing page. Meta’s own A/B testing guidance is built around comparing strategies by selecting variables intentionally — not mixing changes all over the place.

By the end of Day 4, you’ll usually see a pattern: one angle starts producing better clicks and better downstream behavior (adds to cart / purchases), even if it wasn’t the flashiest video.

That’s your real win.

Because once you find a winning angle, you can scale it fast by turning it into iterations (new creators, new proof, new pacing) while keeping the core message the same.

Day 5–6: Iterations (Turn a Winner Into a Machine)

By Day 5, your job is no longer “test more random UGC.”

Your job is to take what’s already working (a strong hook + a strong angle) and multiply it.

Because this is where real scaling comes from: not from finding one lucky ad… but from turning one winning concept into 10–20 variations that keep performing.

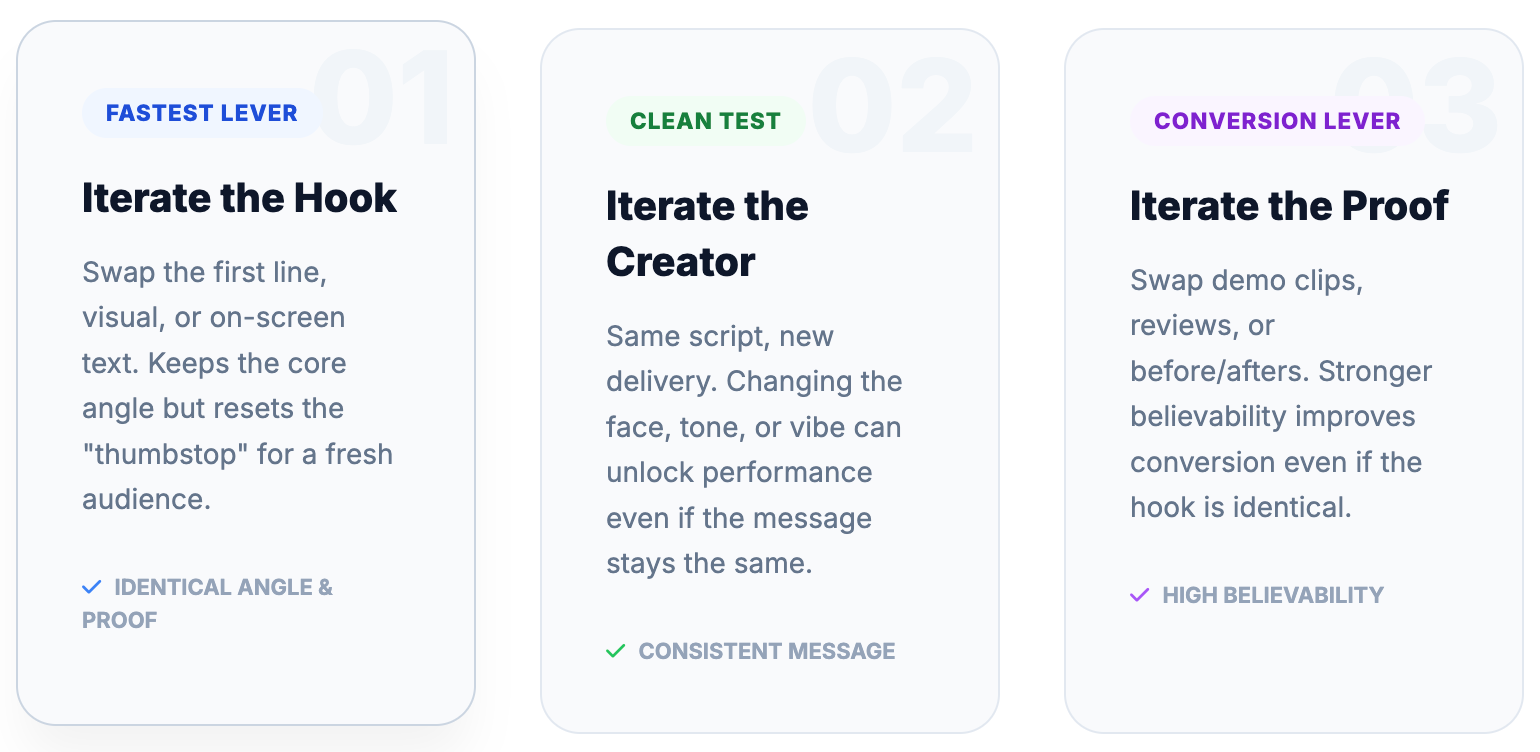

A lot of experienced media buyers describe this as creative iteration: once you find a concept that hits, you rebuild it in multiple versions so you can keep performance stable even as fatigue kicks in.

The key is that you’re not “reinventing” the ad. You’re keeping the winning idea intact and tweaking one element at a time, so the concept stays recognizable while the execution stays fresh.

Here are the highest-leverage iterations to test on Day 5–6:

Start with new hooks (even small changes). The hook is still the fastest lever you can pull, and you’ll often find that a winning angle becomes even stronger when you match it with new openings.

Then test new creators using the same script. UGC performance can swing hard depending on delivery — tone, pacing, trust, and how “real” the video feels. Swapping creators is one of the simplest ways to get fresh performance without changing the core message.

After that, swap the proof. Keep the same story, but change what makes it believable: a different demo clip, a stronger before/after, a review screenshot, a “day 1 vs day 7” result, or even a clearer “here’s how it works” moment. Proof is often the difference between “interesting” and “I want it.”

Finally, iterate pacing and format details — captions, on-screen text, faster cuts, slower cuts, different b-roll, shorter/longer versions. Reddit threads about iteration consistently mention these types of changes because they’re easy to produce and often unlock extra performance without changing the core concept.

If you do this right, you finish Day 6 with something way more valuable than “a winner.”

You finish with a cluster of ads that all share the same winning concept — and that’s what makes scaling safe.

Day 7: Winner Selection + Scaling Plan

By Day 7, you should have something way more valuable than “a few UGC videos that didn’t totally flop.”

You should have data-backed patterns: which hooks stop the scroll, which angles drive intent, and which variations actually convert. Now the job is to make a clear decision and turn that learning into a scaling plan.

Start by separating your results into three buckets: attention, engagement, and conversion. An ad that gets strong early attention (hook winners) isn’t automatically a business winner; it just means the opening did its job. Your “hook” is the element that grabs attention and pulls people into the message, and that’s exactly how Social Cat defines it too.

For attention, look at metrics like thumbstop / hook rate (commonly calculated as 3-second video plays ÷ impressions).

This quickly tells you which openings are worth building on.

Then zoom out and pick your real winners: the ads that actually drive purchases at a CPA you can live with (or a ROAS that makes sense). Reddit advertisers who’ve scaled spend heavily often describe their system the same way: test fast, find winning angles, then scale by iterating the winners instead of constantly reinventing the wheel.
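To make that selection rule concrete, here’s a hedged Python sketch. The `AdResult` class, the `pick_winners` function, the minimum-purchases filter, and all the numbers are assumptions added for illustration; the article itself only says to pick by CPA (or ROAS) against your own target.

```python
from dataclasses import dataclass


@dataclass
class AdResult:
    name: str
    spend: float
    purchases: int

    @property
    def cpa(self) -> float:
        # No purchases means CPA is undefined; treat it as infinitely expensive.
        return self.spend / self.purchases if self.purchases else float("inf")


def pick_winners(results, target_cpa, min_purchases=5, max_winners=3):
    """Keep ads at or under the CPA target with enough purchases, cheapest first."""
    qualified = [r for r in results if r.purchases >= min_purchases and r.cpa <= target_cpa]
    return sorted(qualified, key=lambda r: r.cpa)[:max_winners]


# Hypothetical week of results.
results = [
    AdResult("demo_v1", spend=120.0, purchases=8),     # CPA 15
    AdResult("review_v2", spend=90.0, purchases=3),    # too few purchases to judge
    AdResult("skeptic_v1", spend=150.0, purchases=6),  # CPA 25
    AdResult("story_v3", spend=200.0, purchases=5),    # CPA 40, over target
]
winners = pick_winners(results, target_cpa=30.0)
print([w.name for w in winners])  # ['demo_v1', 'skeptic_v1']
```

The `min_purchases` floor is there so a single lucky sale doesn’t crown a winner; set it to whatever volume you actually trust for your account.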

Once you’ve picked 1–3 winners, scale them in a way that doesn’t break the results. The simplest rule is: don’t scale one ad… scale the concept. That means taking the winning angle and producing multiple variants (new creators, new proof, slight hook changes), so performance stays stable when one version fatigues.

And one important reminder while you scale: keep your testing logic clean. Meta’s own A/B testing best practices emphasize that your test is more conclusive when ad sets are identical except for the variable you’re testing — the same mindset applies here when you’re expanding winners into more variants.

Budget Splits That Make Testing Predictable

UGC testing gets messy when you don’t have a clear budget rule. You start with “just testing a few ads,” then suddenly you’ve spent $500 on 12 videos… and you still can’t confidently say what works.

The fix is simple: split your budget into two jobs.

One part exists to buy learning (testing hooks + angles). The other part exists to buy performance (scaling the winners). If you don’t separate these, you’ll either overspend on bad ideas or underfund the ads that are actually working.

This matches the same logic Meta uses for A/B testing in general: keep tests controlled and structured so you can isolate what’s driving results, instead of letting everything blur together.

A good default split: 70% testing / 30% scaling

If you’re still hunting for winners, prioritize testing. You need enough spend per creative to get a real read, especially when you’re comparing hooks (your opening “bait” that gets people to stop scrolling and keep watching).

Here’s what this looks like in practice.

If you’re spending $20–$100/day, keep it lean. Run fewer ads, but make the tests clean.

For example, at $50/day, you might run 7 hook tests at $5/day (that’s your learning budget), and keep $15/day to push the best performer so you don’t lose momentum. Reddit testers often recommend structured, controlled variations like this rather than throwing dozens of ads into the account with no plan.

If you’re spending $100–$500/day, you can do it properly at volume. The goal here is to feed the machine: consistently test new hooks and angles while scaling the winners.

At $300/day, a common split is $180 testing (12–18 creatives) and $120 scaling (2–4 winners). That works out to 60/40, slightly heavier on scaling than the 70/30 default. It’s enough budget to learn fast, without your “winning” ads getting stuck at $10/day forever.
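Here’s the arithmetic behind both examples as a small Python sketch. The `split_budget` helper is hypothetical; it only reproduces the numbers above so you can plug in your own daily budget, testing share, and creative count.

```python
def split_budget(daily_budget: float, testing_pct: int, n_tests: int) -> dict[str, float]:
    """Split a daily budget into testing and scaling, plus spend per test creative."""
    if n_tests <= 0:
        raise ValueError("n_tests must be positive")
    testing = round(daily_budget * testing_pct / 100, 2)
    scaling = round(daily_budget - testing, 2)
    return {"testing": testing, "scaling": scaling, "per_test": round(testing / n_tests, 2)}


# $50/day at 70/30 with 7 hook tests.
print(split_budget(50, testing_pct=70, n_tests=7))
# {'testing': 35.0, 'scaling': 15.0, 'per_test': 5.0}

# $300/day with $180 testing across 12 creatives (a 60/40 split).
print(split_budget(300, testing_pct=60, n_tests=12))
# {'testing': 180.0, 'scaling': 120.0, 'per_test': 15.0}
```

At the upper end of the 12–18 creative range, the same $180 drops to $10 per creative per day, so more creatives means a thinner read on each one.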

One important detail: testing budgets only work if your spend is distributed fairly. A lot of people run tests in ways where the platform barely spends on some creatives, which makes it look like you tested… but you didn’t. That’s why you’ll see experienced advertisers suggest setups that force even spend between variations when comparing hooks or angles.

Once you lock this budget logic in, testing becomes predictable. You stop feeling like you’re “wasting money on UGC,” and you start treating testing like a weekly system: spend X to learn, spend Y to scale.

Testing Setup (Campaign Structure That Doesn’t Confuse You Later)

Your testing framework can be perfect… and still fail if your campaign setup is messy.

Why? Because Meta will often push spend toward whatever it thinks will win early, which means some ads barely get delivery. Then you look at results and think you “tested,” but in reality you just let the algorithm pick favorites.

So your goal here is simple: set up your tests so spend is fair, comparisons are clean, and decisions are easy. Meta’s own A/B testing best practices also point to this same idea: keep tests structured, measurable, and focused (ideally testing one variable at a time).

Here are 3 campaign structures that work (from simplest to most controlled):

Structure A: One Ad Set, Multiple Ads (fast + simple)

This is the “get it live” setup.

You run one campaign, one ad set, and load your different UGC videos inside as separate ads. It’s easy to manage and works well when you’re doing broad testing and just want early signals.

The tradeoff is that Meta may not distribute spend evenly across all creatives, so some hooks won’t get a fair shot.

Structure B: One Ad Set per Angle (cleanest for angle testing)

This is the setup most people end up using once they want cleaner learning.

Each ad set represents one angle, but the audience settings stay the same across all ad sets. That way, you can see which “why buy” story performs best without audience differences skewing the result. This is exactly how a lot of experienced testers describe angle testing on Reddit: identical settings, ads grouped by angle.

Inside each angle ad set, you place a few ads with the same structure but different hooks or creators.

Structure C: One Ad Set per Hook (most controlled for hook testing)

If you’re serious about hook testing, isolate hooks completely.

Some advertisers will split each hook into its own ad set with equal budgets to force fair delivery and see which opener actually earns attention.

This is especially useful in the first 48 hours, because hooks are the “bait” that gets someone to stop scrolling and keep watching.

It’s more work, but the results are usually clearer.

Hook Library: 25 UGC Hook Templates You Can Steal

If you’re testing UGC ads, hooks aren’t optional — they’re the gatekeeper. The first 1–3 seconds decide whether people keep watching, click, or scroll past you like you don’t exist.

And here’s something that shows up constantly in real creator + media buyer conversations: most people only use one hook, when they really need multiple hooks (visual + verbal + text) to stop the scroll.

So instead of overthinking it, use a hook bank like this and test fast.

25 Hook Templates (mix & match)

1) “This you?”

“This you? You keep doing ___ but it never works.” (Social Cat even has this as a defined hook format.)

2) “Stop doing this…”

“Stop doing ___ if you actually want ___.”

3) “Nobody talks about this…”

“Nobody talks about this, but ___ is why you’re struggling.”

4) “I wish I knew this sooner…”

“I wish someone told me this before I wasted money on ___.”

5) “The reason you’re not getting results…”

“The reason ___ isn’t working for you is actually simple.”

6) “Here’s the mistake…”

“You’re making this mistake with ___ and it’s costing you.”

7) “If you have ___, you need this.”

“If you deal with ___, you’re going to want to see this.”

8) “Watch this…” (demo hook)

“Watch what happens when I do this in real time…”

9) “Don’t buy this unless…”

“Don’t buy this unless you want ___.”

10) “I was skeptical…”

“I didn’t believe this would work… until I tried it.”

11) “This is why everyone is switching…”

“This is why everyone is switching from ___ to ___.”

12) “Real results, no fluff.”

“Here’s what happened after ___ days of using this.”

13) “Quick comparison.”

“Left side is ___, right side is ___… spot the difference.”

14) “3 reasons…”

“3 reasons this is better than ___.”

15) “The lazy way to ___.”

“This is the lazy way to ___ (and I’m obsessed).”

16) “POV: you finally fix ___.”

“POV: you finally solve ___ without doing ___.”

17) “I found a hack…”

“I found a hack for ___ and it’s actually working.”

18) “I’m not gatekeeping this.”

“I’m not gatekeeping this because it’s too good.”

19) “What I ordered vs what I got.”

“What I ordered vs what I got… and I’m shocked.”

20) “If you’re about to buy ___, watch this first.”

“If you’re about to buy ___, pause and watch this.”

21) “You’re using it wrong.”

“You’re using ___ wrong. Do this instead.”

22) “The easiest upgrade.”

“This is the easiest upgrade I’ve made all year.”

23) “This solved my ___ in 10 seconds.”

“I did this once and it instantly fixed ___.”

24) “This should be illegal.”

“This is so good it should be illegal.”

25) “Here’s the truth…”

“Here’s the truth about ___ that nobody wants to tell you.”

If you want to test this properly, take one angle, and create 5 hook variations using the same core ad (same structure, same offer). People on Reddit running Meta ads say the best way to test hooks is literally to keep the same UGC video and swap only the first seconds.

Angle Library: 10 Angles That Keep Working for UGC Ads

Once you’ve got a few hooks that stop the scroll, the next thing that decides whether people buy is your angle.

An angle is basically the way you frame the product so it feels instantly relevant. It’s not “what the product is.” It’s why someone should care right now.

And this matters a lot, because (as people keep saying in UGC + Meta ads threads) without a strong angle, no editing trick or creator can save the ad.

Here are 10 angles that consistently show up in winning UGC ads—and are easy to test in batches.

1) Problem → Solution (the “fix the pain” angle)

This is the classic “here’s what was annoying me… here’s what fixed it.”

It works because the viewer instantly self-identifies with the problem, and the product becomes the obvious next step. This angle shows up constantly in UGC testing discussions because it’s simple, direct, and converts well when the pain is real.

2) Demo-First (show it working immediately)

Instead of explaining, you show.

This angle is brutal in the best way: the product either looks useful in 2 seconds or it doesn’t. A lot of Reddit testers mention “demo-first” as one of their most reliable UGC angles because it reduces skepticism fast.

3) “Better Alternative” (old way vs new way)

This is the “I used to do ___, now I do ___” framing.

It works because it gives contrast. People understand value faster when they can compare it to something familiar, especially if the old way is annoying, expensive, or slow.

4) Social Proof (people like you already use this)

This is when the ad leans into validation: reviews, comments, “TikTok made me buy it,” or “everyone’s switching.”

It’s a top-tier angle when buyers need trust before purchase—plus it’s easy to produce because you can pull real proof from reviews/support tickets and turn them into ads.

5) “I Was Skeptical Until…” (anti-ad energy)

This is the angle that feels like honesty, not marketing.

It works because it matches how people talk in real life. They don’t say “this changed my life” instantly; they say “I didn’t believe it… but it actually works.” That natural tone is why UGC often beats polished brand ads.

6) The Specific Outcome (results-focused)

This angle sells the outcome, not the product.

It’s “clear skin,” “more energy,” “better sleep,” “saved 30 minutes,” “made my kitchen easier,” etc. When the outcome is specific, the buyer can picture the benefit immediately (which makes clicking feel logical).

7) “For People Who Hate ___” (identity + lifestyle)

This angle works because it’s not just a product—it’s a personality match.

“For people who hate cooking.”

“For people who can’t stick to routines.”

“For people who don’t want to spend $$$ on ___.”

It filters the audience in a good way: the right people feel called out, and the wrong people scroll away (which often improves efficiency).

8) “The Mistake You’re Making” (reframe)

This angle creates a pattern interrupt: “you’re doing it wrong.”

It works especially well when your product replaces a common habit, tool, or method. You’re not saying “buy this.” You’re saying “here’s why your current approach fails… here’s the better move.”

9) Education / Explainer (value-first)

This is when the creator teaches something useful, quickly.

It’s especially common with products that feel “too good to be true,” or need a bit of explanation to click. Even in AI UGC discussions, people point out that informational/problem-solution styles tend to work better than fake testimonial energy.

10) Format-Led Angles (skit, street interview, expert commentary)

Sometimes the “angle” is the format itself.

Especially lately, more advertisers are leaning into formats that feel native and entertaining instead of scripted testimonials. RevenueCat’s breakdown for UGC-style ads in apps highlights how value-first, feed-native formats can outperform predictable testimonial-style creative.

What matters most isn’t picking “the best angle” on paper. It’s testing angles in a way that’s clean and controlled—ideally in separate ad sets so delivery stays consistent.

Iteration Playbook: How to Get 20 Variations From 1 Winner

Once you find a winning hook + angle combo, the goal isn’t to “protect” that one ad like it’s rare gold.

The goal is to turn it into a repeatable system.

Because in real Meta accounts, winners don’t stay winners forever. Performance shifts, frequency climbs, and creative fatigue hits. The brands that scale consistently are the ones that can take a winning concept and produce variations fast — without changing the core message. Reddit advertisers talk about this all the time: when something works, you don’t restart from zero… you build around the winner.

Here’s the mindset: keep the concept identical, refresh the packaging.

Social Cat even describes the hook as the “bait” that pulls people in, which is why updating hooks is usually the highest leverage first move.

The 3 iteration levers that create the biggest gains

First, iterate the hook (small changes, big impact).

Same ad, same angle, same proof — but swap the first line, the first visual, or the first on-screen text. Many marketers recommend testing new hooks first when iterating a winning video because it’s the fastest way to get a fresh version without rebuilding the entire ad.

Second, iterate the creator (same script, different delivery).

You’ll be surprised how often the exact same concept performs differently with a new face, tone, pacing, or vibe. This is still a “clean” iteration because the message stays consistent — you’re just changing who delivers it.

Third, iterate the proof (same story, stronger believability).

Swap in a better demo clip, a before/after, a stronger review, a comment screenshot, or a more specific claim. When the proof feels more real, conversion rate usually improves even if the hook stays the same.

The easiest “20 variations” formula

If you want a simple way to scale output without overthinking it, use this:

Take 1 winning angle, then create:

- 2 hook versions

- 2 proof versions

- 5 creators

That’s 2 × 2 × 5 = 20 variations from one concept.

And importantly: you’re not making 20 brand-new ads. You’re making 20 versions of the same winning idea, which keeps your testing clean and your scaling safer. Reddit threads on scaling winners often recommend this kind of controlled iteration over “random new creative batches” because it’s easier to learn and easier to repeat.
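The 2 × 2 × 5 formula is just every combination of hook, proof, and creator around one fixed angle. A quick Python sketch of that, with placeholder labels and a made-up `build_variations` helper, looks like this:

```python
from itertools import product


def build_variations(angle: str, hooks: list[str], proofs: list[str], creators: list[str]) -> list[dict[str, str]]:
    """Return one brief per hook/proof/creator combination, all sharing the same angle."""
    return [
        {"angle": angle, "hook": hook, "proof": proof, "creator": creator}
        for hook, proof, creator in product(hooks, proofs, creators)
    ]


# Placeholder labels for illustration.
hooks = ["hook_a", "hook_b"]
proofs = ["demo_clip", "before_after"]
creators = [f"creator_{i}" for i in range(1, 6)]

variations = build_variations("winning_angle", hooks, proofs, creators)
print(len(variations))  # 20
```

Each row is a production brief, not a finished ad. Because every row records which hook, proof, and creator it used, you can still trace a result back to the element that changed.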

Common Testing Mistakes That Kill Winning Ads

Most brands don’t “lose” because their product sucks.

They lose because their testing is messy, emotional, or inconsistent, and they end up killing good ads too early while scaling the wrong ones.

Here are the mistakes that quietly destroy UGC testing (even when the creatives look good).

You change too many variables at once

This is the biggest one.

If you swap the creator, the hook, the angle, the structure, the proof and the offer… you’re not running a test. You’re just rolling the dice.

Meta’s A/B testing approach is built around isolating a single variable so you can clearly understand what caused the difference in performance.

The exact same logic applies to UGC. Keep most of the ad identical, change one thing, and your results become usable.

You judge ads too early

A lot of people kill ads after a few thousand impressions because the CPA looks bad.

But early performance can be noisy, and some ads start slow, then stabilize once delivery finds the right pockets of people. Reddit threads from media buyers constantly mention this: you need enough spend for the ad to “prove itself” before you label it dead.

You pick winners based on CTR only

CTR can lie.

A hook can drive curiosity clicks without purchase intent, especially if the ad is entertaining or controversial. That’s why you’ll often see “high CTR, low conversion” ads in UGC.

Clicks matter, but the real winner is the one that produces purchases at a CPA that makes sense for your margins.

You test with budgets too small to learn

If you launch 15 creatives but spend $2/day on each, you might technically be “testing”… but you’re not getting enough signal for the results to mean anything.

This is why the testing budget split matters. If you want clear learning, each creative needs enough spend to get a fair chance.

You let the algorithm “test” for you

When you dump a bunch of creatives into one ad set, Meta will often push spend toward a few early favorites. Then you end up with 2 ads that got spend, and 10 ads that barely ran.

Your structure should make delivery fair, especially during hook testing and angle testing. Meta’s A/B test framework exists for a reason: equal conditions produce real conclusions.
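One quick sanity check before you read any results is to see whether spend was actually spread around. The Python sketch below flags ads that got far less than an even share; the `underdelivered` name, the 50% cutoff, and the spend figures are assumptions for illustration, so pick a cutoff that fits your own tolerance.

```python
def underdelivered(spend_by_ad: dict[str, float], min_share_of_fair: float = 0.5) -> list[str]:
    """Return ads whose spend is below `min_share_of_fair` of an even split."""
    total = sum(spend_by_ad.values())
    if total == 0:
        # Nothing delivered at all, so every ad counts as underdelivered.
        return list(spend_by_ad)
    fair_share = total / len(spend_by_ad)
    return [ad for ad, spend in spend_by_ad.items() if spend < fair_share * min_share_of_fair]


# Hypothetical spend after two days in a single ad set.
spend = {"hook_1": 48.0, "hook_2": 41.0, "hook_3": 6.0, "hook_4": 3.0, "hook_5": 2.0}
print(underdelivered(spend))  # ['hook_3', 'hook_4', 'hook_5']
```

Anything on that list hasn’t really been tested yet, so treat it as “no data” rather than “loser” and rerun it in a structure that forces more even spend.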

You scale one ad instead of scaling the concept

This one is sneaky.

Even if you find a winner, you can’t rely on it forever. The real move is to scale the angle + hook pattern by producing multiple variations (new hooks, creators, proof, pacing) — so your performance doesn’t collapse when the original ad fatigues.

That’s how you build a creative machine, not a one-hit wonder.

Your 7-Day Testing Checklist (Copy/Paste)

If you want to run this framework without overthinking it, here’s the exact weekly checklist.

Use it like a routine: run it every 7 days, collect patterns, build a library of winners.

Day 0 — Prep

Choose 3 angles. Write 5 hooks for each one.

Build your matrix: 3 angles × 5 hooks = 15 ads.

Lock what stays the same: landing page, offer, CTA, format, and pacing (as much as possible). Clean testing = clean learning.

Day 1–2 — Hook Testing

Pick one angle and test hooks against it.

Launch 10–15 variations, changing only the first 1–2 seconds.

Cut the bottom performers and keep the top 20–30% hooks.

Day 3–4 — Angle Testing

Take your top 2–3 hooks and apply them to 3–5 angles.

Keep the structure identical so you’re actually testing messaging, not random creative changes.

Pick the angle that consistently drives intent and conversions.

Day 5–6 — Iterations

Turn the winning hook + angle into variations.

Iterate the highest-leverage elements: new creators, new proof, small hook changes, pacing changes.

Keep the core concept the same.

Day 7 — Winner Selection + Scaling

Pick 1–3 winners based on conversion performance (CPA / ROAS target), not just CTR.

Scale the concept by launching multiple variants; don’t bet everything on one video.

